Defence capability future needs

A new concept to embrace autonomous systems

Military operations cannot be immune to the progress of automation and artificial intelligence (AI) evident in other areas of society. AI has often been framed in the news as adversarial to humans. People continually hear of “progress” in terms of machines defeating humans at games such as Go, and of the spectre of machines taking our jobs. Despite this adversarial framing of AI and autonomy, true strategic advantage lies in shifting the paradigm to one of collaboration. Kasparov, once defeated by Deep Blue, now advocates Centaur Chess, a form in which human-machine teams compete. The world champion in chess today is not a machine alone, but a human-machine team. For the ADF, this signals the need to evolve the concept of network-centric warfare to include AI decision-makers embedded in unmanned platforms and, in manned platforms, AI decision aids that are highly integrated with human decision-making. Such a concept was first developed in 1999, extended in 2005, and forms a significant part of DST programs today.

Agile capability development

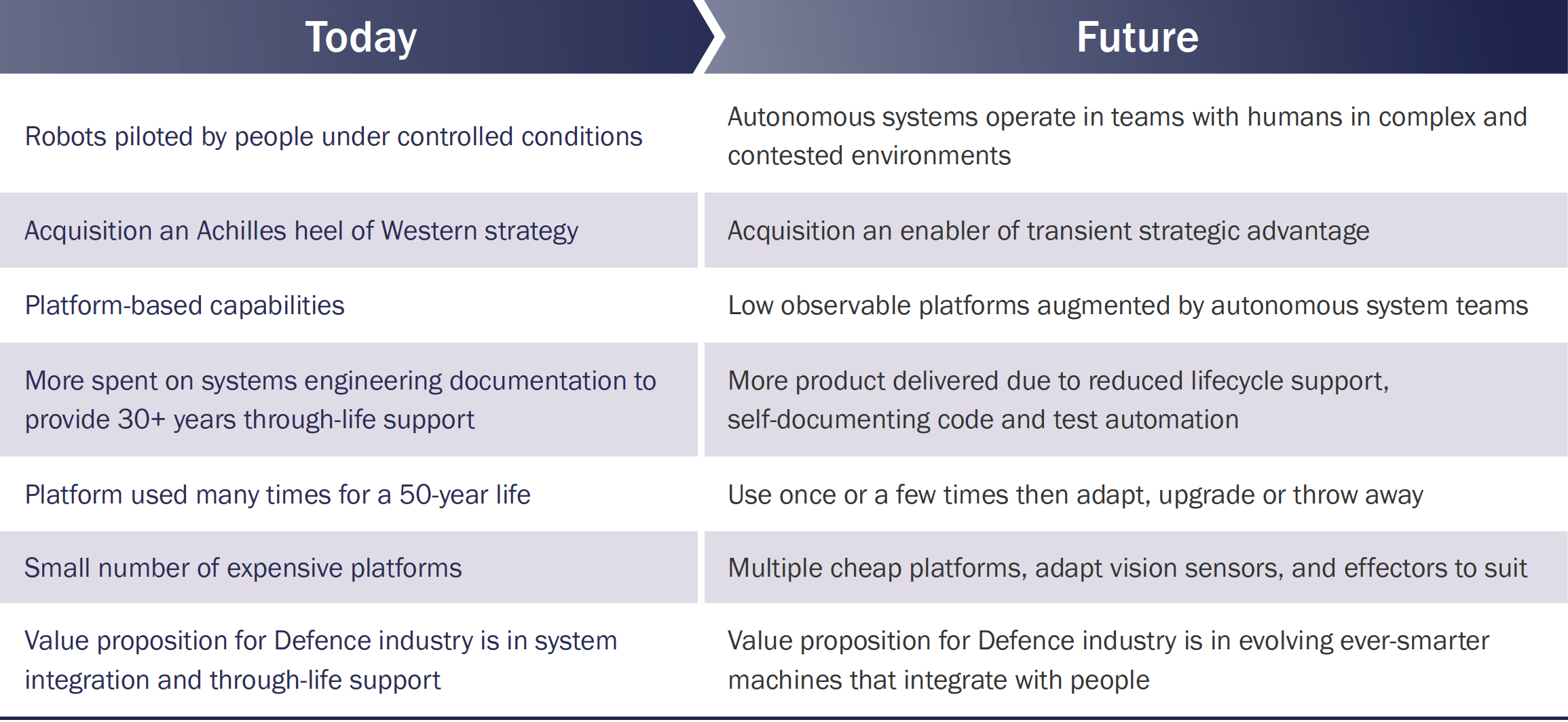

Delivering on the transient capability edge requires rapid pull-through of ground-breaking research into integrated systems that can be deployed quickly. This in turn requires agile development of software and hardware systems. The following table contrasts some of the expected changes.

The Agile approach, in keeping with the need to test trusted autonomous systems under conditions that emulate the real world, requires the development of significant, well-instrumented test ranges that can work with ADF elements as needed. Australia offers a strategic advantage to the world in this respect because it has ‘big’ air, maritime, and land spaces in which to conduct such tests. In partnership with Australia’s innovative regulatory authorities, there is potential to build a strong body of evidence on the reliability of these systems, which will be important to future product certification. Of course, this must be underpinned by new technologies for assurance. Improved intentional systems, including multi-agent technologies, mean that mission goals and plans may be adapted in software. Verifying the trustworthiness of machines that think and reason more like humans will require new certification methods.

Trusted autonomous systems in the Defence Integrated Investment Program (IIP)

The following tables map planned Defence capabilities, drawn from the public 2016 Integrated Investment Program, to opportunities for trusted autonomous systems. This opportunity totals over AU$200 billion and, although trusted autonomous systems are a small component of this in terms of cost, the numbers are staggering, as is the potential breadth of impact of these technologies.