Technology needs and goals

The trusted autonomous systems needed to achieve Defence missions require research, development and fielding of systems under realistic conditions. Because the scope of trusted autonomous systems encompasses machines (potentially embodied as robots), humans and their integration, seven principles are advocated for future Defence systems. All seven rest on a foundation of adaptation. Each principle may be applied to a varying degree, from homogeneity and centralisation at one extreme to heterogeneity and decentralisation at the other, depending on operational dynamics, complexity and perceived risk. The seven Trusted Autonomous Systems principles are:

- Ability to devolve decision-making: reliance on a single human decision-maker or a centralised decision cannot always be afforded. Autonomy is founded on this principle.

- Capacity for ubiquitous decision-making: a comparable and significant decision-making capability on (potentially) every military platform that can run software, from missiles through to headquarters, allows scalable mass and graceful gradation of decision-making capability.

- Automated decision aids and automated decision-makers: these are the primary means by which the potential of ubiquitous autonomous systems is realised as a force multiplier.

- Human-machine integrated decision-making: offsets machine weaknesses with human strengths, and human weaknesses with machine strengths, to achieve systemic robustness. It aims to let the human be the hero while ameliorating the hazards.

- Capacity to be distributed (physical) and decentralised (intent): allows graceful degradation to precision strike, and a diversity of alternative mission goals, when systemic integrity is compromised. This extends the ADF principle of Mission Command to include trusted autonomous systems.

- Socially coordinated: allows unity of human and machine decision and action, with dynamic command and control structures rather than fixed ones, through social contracts and agreement protocols.

- Managed levels of operation: constrains the binding of social agreements around self-control, common location, common intent, and cooperation/competition given available resources.

From these principles follow three core research areas:

- Machine Cognition: autonomous decision aids and autonomous decision-makers to achieve self-determining and self-aware systems.

- Human-Autonomy Integration: human-machine integrated systems, human-machine and machine-machine social coordination, and managed levels that include human-autonomy teaming to provide unity.

- Persistent Autonomy: adaptation of automation under fundamental uncertainty to achieve self-sufficient and survivable systems.

In turn, the technology needs related to these research areas include:

Machine Cognition

- Sensor fusion (machine sensation) – machine learning and data-driven techniques to integrate multiple Defence and commercial sensor technologies, including vision sensors, to improve target acquisition.

- Object fusion (machine perception) – machine learning and AI techniques to detect and track objects in volumes of time and space, and attribute the information that characterises them, improving automatic target recognition.

- Situation fusion (machine comprehension) – AI techniques to detect and track situations based on multiple objects and their relationships as meaningful symbolic representations described in language. This allows machines to identify their operating context, to improve perception and apply the appropriate control routines.

- Scenario fusion (machine projection) – AI techniques to detect and track projected ‘future worlds’ described in language. This allows machines to autonomously identify threats, warnings and opportunities and prepare to take action.

- Integrated resource management and replanning – tightly couples the allocation of resources, including own effectors, with adaptation of active sensor state according to risk, and conducts online, dynamic planning to form appropriate automated behavioural control responses.

- Agent theory of mind (others' minds and own) – enables machines to represent and reason about their own 'mental' state and those of others, including humans, so that they can treat other agents appropriately rather than as non-intentional environmental objects.

- Intentional systems – representation of cognitive elements in machines, including beliefs, desires and intentions, to improve the explainability and traceability of machine state to humans.

- Semantic learning systems – machines that learn context and meaning without the need for exhaustive semantic engineering.

- Autonomous knowledge acquisition – machines that acquire knowledge autonomously in a form directly usable with their semantic language constructs.
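To illustrate what representing beliefs, desires and intentions can look like in practice, the following is a minimal Python sketch of a belief-desire-intention (BDI) style agent. It is not drawn from this document: the class names, the priority-based deliberation rule and the example goals (`survey_area`, `return_to_base`, `fuel_ok`) are all hypothetical. What it shows is traceability: every belief update and every commitment is logged, so the agent can report why it holds its current intention.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Desire:
    name: str
    priority: int
    # Beliefs (key -> required value) that must hold before this desire can be pursued.
    preconditions: dict


@dataclass
class BDIAgent:
    beliefs: dict = field(default_factory=dict)
    desires: List[Desire] = field(default_factory=list)
    intention: Optional[Desire] = None
    trace: List[str] = field(default_factory=list)  # human-readable audit log

    def perceive(self, key, value):
        """Update a belief and record the change for later explanation."""
        self.beliefs[key] = value
        self.trace.append(f"belief {key}={value}")

    def deliberate(self) -> Optional[Desire]:
        """Commit to the highest-priority desire whose preconditions currently hold."""
        feasible = [
            d for d in self.desires
            if all(self.beliefs.get(k) == v for k, v in d.preconditions.items())
        ]
        self.intention = max(feasible, key=lambda d: d.priority, default=None)
        chosen = self.intention.name if self.intention else "none"
        self.trace.append(f"intention {chosen}")
        return self.intention

    def explain(self) -> str:
        """Return the chain of belief changes and commitments, oldest first."""
        return "; ".join(self.trace)


# Hypothetical usage: the agent drops its survey goal when fuel becomes insufficient.
agent = BDIAgent(desires=[
    Desire("return_to_base", 1, {}),
    Desire("survey_area", 2, {"fuel_ok": True}),
])
agent.perceive("fuel_ok", True)
agent.deliberate()
agent.perceive("fuel_ok", False)
agent.deliberate()
print(agent.explain())
```

Selecting the highest-priority feasible desire is the simplest possible deliberation rule; practical BDI frameworks add plan libraries, intention reconsideration and commitment strategies on top of this kind of representation.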

Human-Autonomy Integration

- Agreement protocols – support binding agreements between humans and agents, to unify intent and capability (e.g., plans) that are lawful and accountable.

- Interaction models, systems, and technologies – develop models to understand and improve human engagement and performance with autonomous systems, including mixed initiative.

- Reciprocal trust and reputation – technologies to provide appropriate transparency, confidence, feedback and reputation, to improve human trust in machines where appropriate and to convey when machines should not be trusted.

- Narrative and story-telling – explain machine state, actions and reasoning to humans at all levels of fusion and planning to improve human awareness and control.

- Emotion recognition – technologies to identify, characterise and track human emotional state to adapt interfaces and interaction modes and improve total performance, including through mixed initiative strategies.
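The need for reciprocal trust and reputation can be made concrete with the beta reputation model, one established way of turning a record of satisfactory and unsatisfactory outcomes into a trust estimate. This document does not prescribe any particular model, so the choice here is an assumption, and the thresholds in the hypothetical `advice` method are illustrative only. The sketch separates "low trust" from "too little evidence", which is one way of conveying when not to trust a machine.

```python
from dataclasses import dataclass


@dataclass
class BetaReputation:
    positive: float = 0.0    # r: outcomes judged satisfactory
    negative: float = 0.0    # s: outcomes judged unsatisfactory
    forgetting: float = 1.0  # 1.0 keeps all evidence; values < 1.0 discount older outcomes

    def record(self, satisfactory: bool) -> None:
        """Age existing evidence by the forgetting factor, then add the new outcome."""
        self.positive *= self.forgetting
        self.negative *= self.forgetting
        if satisfactory:
            self.positive += 1
        else:
            self.negative += 1

    def expected_trust(self) -> float:
        """Mean of the Beta(r + 1, s + 1) distribution over reliability."""
        return (self.positive + 1) / (self.positive + self.negative + 2)

    def uncertainty(self) -> float:
        """Uncertainty mass: close to 1 with no evidence, shrinking as outcomes accumulate."""
        return 2 / (self.positive + self.negative + 2)

    def advice(self, trust_threshold: float = 0.7, max_uncertainty: float = 0.3) -> str:
        """Hypothetical decision rule: report insufficient evidence before judging trust."""
        if self.uncertainty() > max_uncertainty:
            return "insufficient evidence"
        return "rely" if self.expected_trust() >= trust_threshold else "do not rely"


# Hypothetical usage: eight satisfactory and two unsatisfactory outcomes.
rep = BetaReputation()
for outcome in [True] * 8 + [False] * 2:
    rep.record(outcome)
print(rep.expected_trust(), rep.uncertainty(), rep.advice())
```

Setting `forgetting` below 1.0 lets recent behaviour dominate, so trust can recover or decay as a machine's performance changes in the field.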

Persistent Autonomy

- Assurance – new approaches to verification and analysis for adaptive and learning systems to advance integrity and trust certification in autonomous systems.

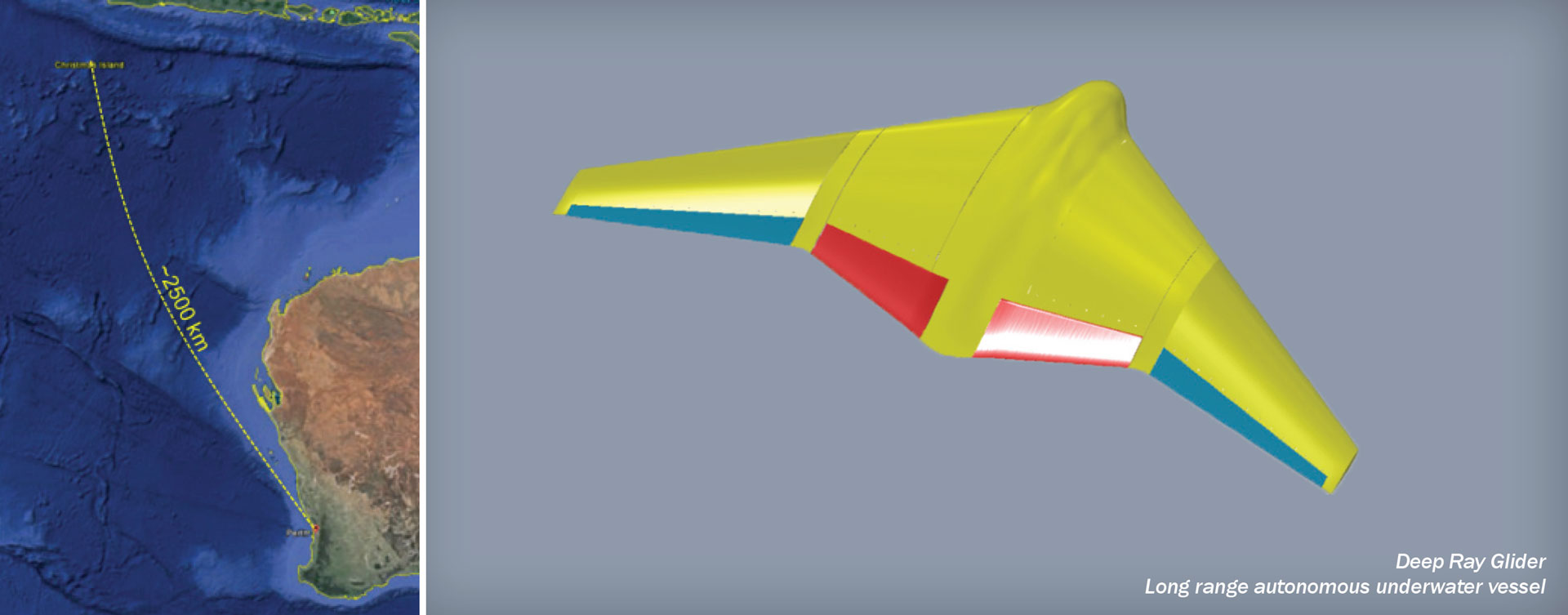

- Viability maintenance – strategies to improve robot survival in dynamic environments over large volumes of time and space.

- Evolutionary control – determines new control laws under conditions of unpredicted degradation.

- Self-organisation and self-healing – technologies to help individuals and groups adapt and survive.

- Novel and low cost sensors and actuators – capabilities that permit new forms of processing that significantly reduce size, weight and power and may be used ubiquitously.

- Low observable techniques – to reduce signatures of autonomous systems in all environmental domains.

- Multi-domain systems – robotic and vision systems capable of operating across land, sea surface, sea sub-surface, air and space domains.

- Alternate navigation and timing – means to provide accurate and low-cost navigation in complex environments where satellite-based global positioning systems may be denied or unavailable.

- Alternate communications – means to provide survivable and low-observable communications for multiple autonomous systems operating in complex and denied conditions.

- Moral machines – technologies to enhance system compliance with international humanitarian law, and to encode those laws and rules of engagement in autonomous systems so that they can identify and protect non-combatants and symbolically marked objects.